Intro

This is my academic homepage. My current research interests lie in applying NLP, NLU, and Deep Learning to language technologies.

I obtained my PhD degree (in engineering) from the University of Melbourne (Australia) in 2019, under the joint supervision of Prof. Trevor Cohn and Assoc. Prof. Reza Haffari. My PhD research lies in Natural Language Processing and Applied Machine Learning, particularly Deep Learning models (e.g., sequence-to-sequence learning and inference) applied to neural machine translation.

Prior to coming to Melbourne, I spent ~7 years (2008-2015) in Singapore, first studying for my Master's degree at the National University of Singapore (NUS) and then working as an R&D engineer in the HLT department of the Institute for Infocomm Research (I2R), A*STAR. Before that, I was a student, teaching and research assistant, and lecturer at the University of Science, Vietnam National University, Ho Chi Minh City, Vietnam. During this time, I was also a research intern at the National Institute of Informatics in Tokyo, Japan, working under Dr. Nigel Collier on a bio-text mining project (BioCaster).

Recent Highlights

*** My PhD viva has been completed and my PhD thesis is now available online.

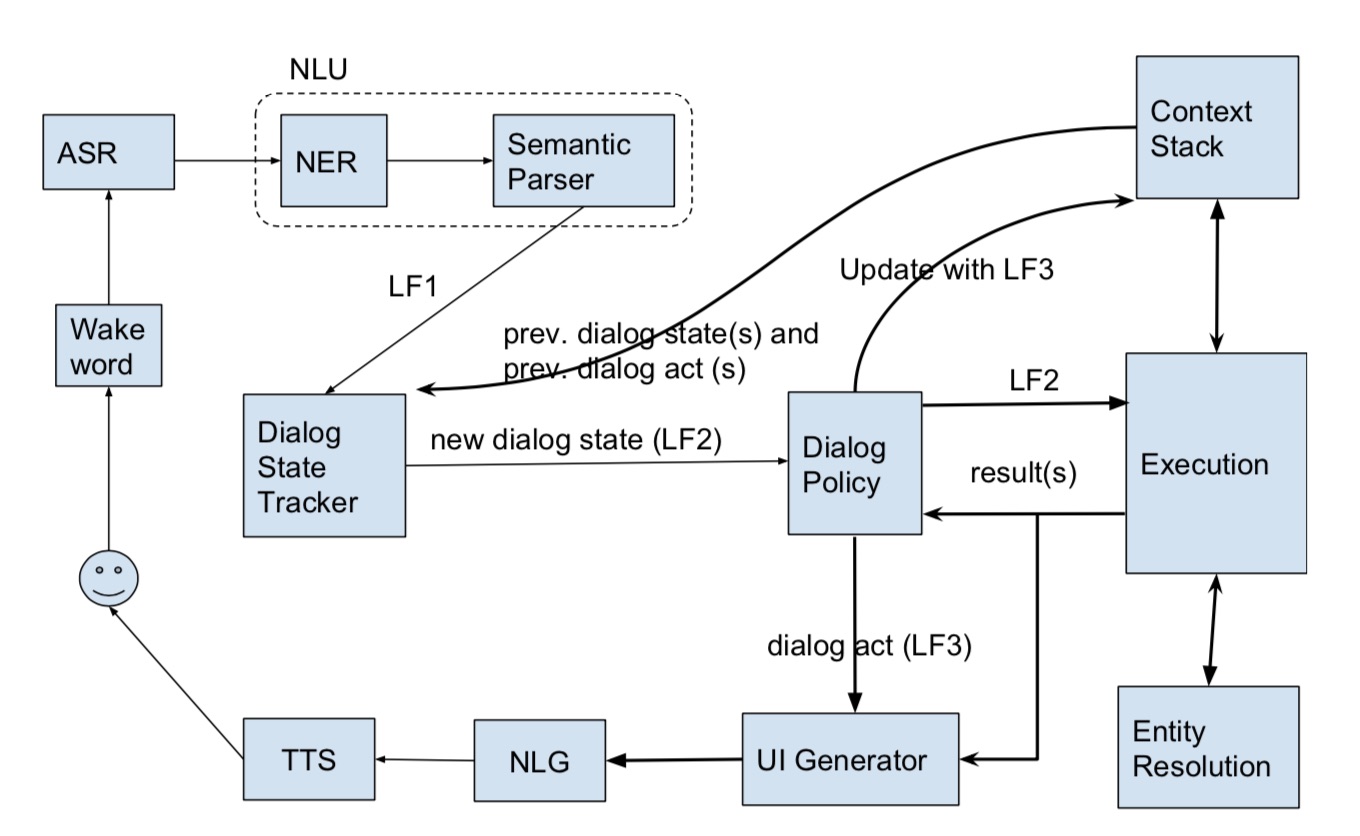

*** On 10 May 2019, I joined Oracle Corporation, working for Oracle Digital Assistant.

*** On 15 Feb 2019, I submitted my thesis for final examination.

*** In Sep 2018, I joined Speak.AI (a new startup company headquartered in WA, USA) as an AI scientist, working on solutions for on-device conversational AI. Since May 2019, Speak.AI has been part of Oracle Corporation.

*** I was a research intern at NAVER LABS Europe (formerly Xerox Research Centre Europe) from Mar 2018 to June 2018, working with Marc Dymetman on the project "Globally-driven Training Techniques for Neural Machine Translation".

PhD Thesis, The University of Melbourne, VIC, Australia. 2019.


Long Duong, Vu Cong Duy Hoang, Tuyen Quang Pham, Yu-Heng Hong, Vladislavs Dovgalecs, Guy Bashkansky, Jason Black, Andrew Bleeker, Serge Le Huitouze, Mark Johnson. In Proceedings of the Annual Meeting of the Association for Computational Linguistics (ACL-19) (System Demonstrations), 2019.


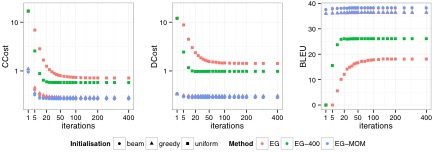

Cong Duy Vu Hoang, Ioan Calapodescu, Marc Dymetman. In arXiv preprint, 2018.


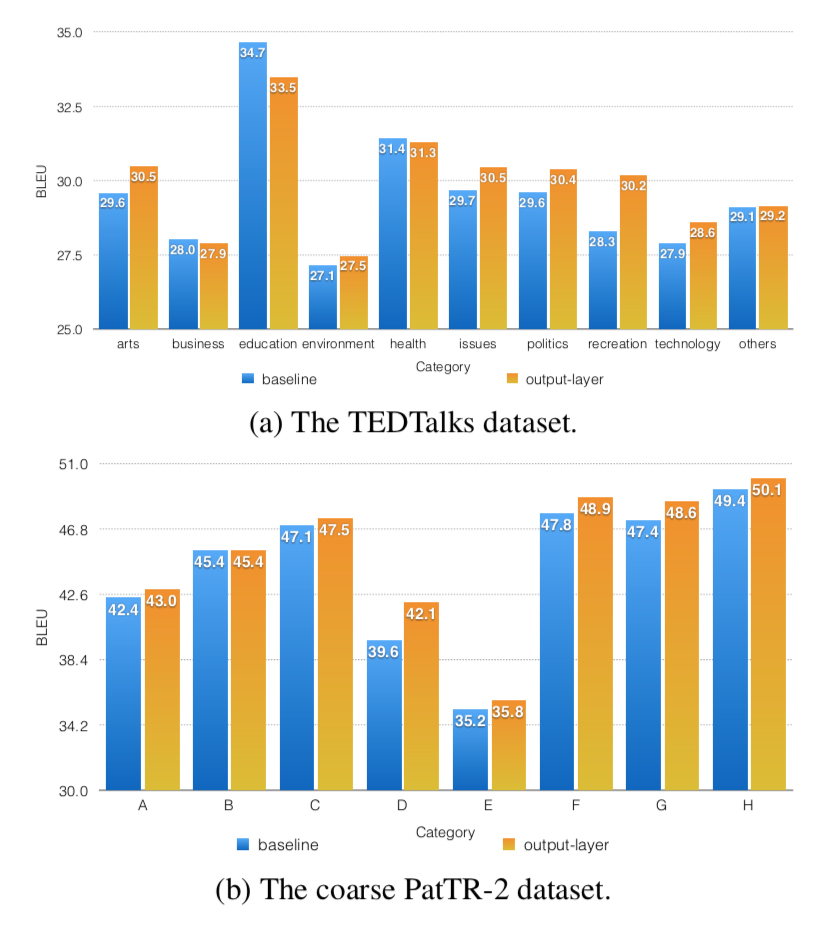

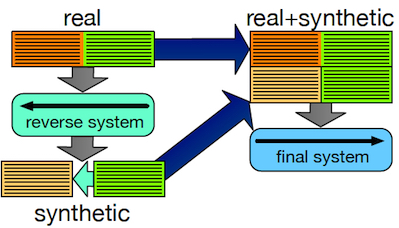

Cong Duy Vu Hoang, Philipp Koehn, Gholamreza Haffari and Trevor Cohn. In Proceedings of The 2nd Workshop on Neural Machine Translation and Generation, associated with ACL 2018 (long, poster), 2018.


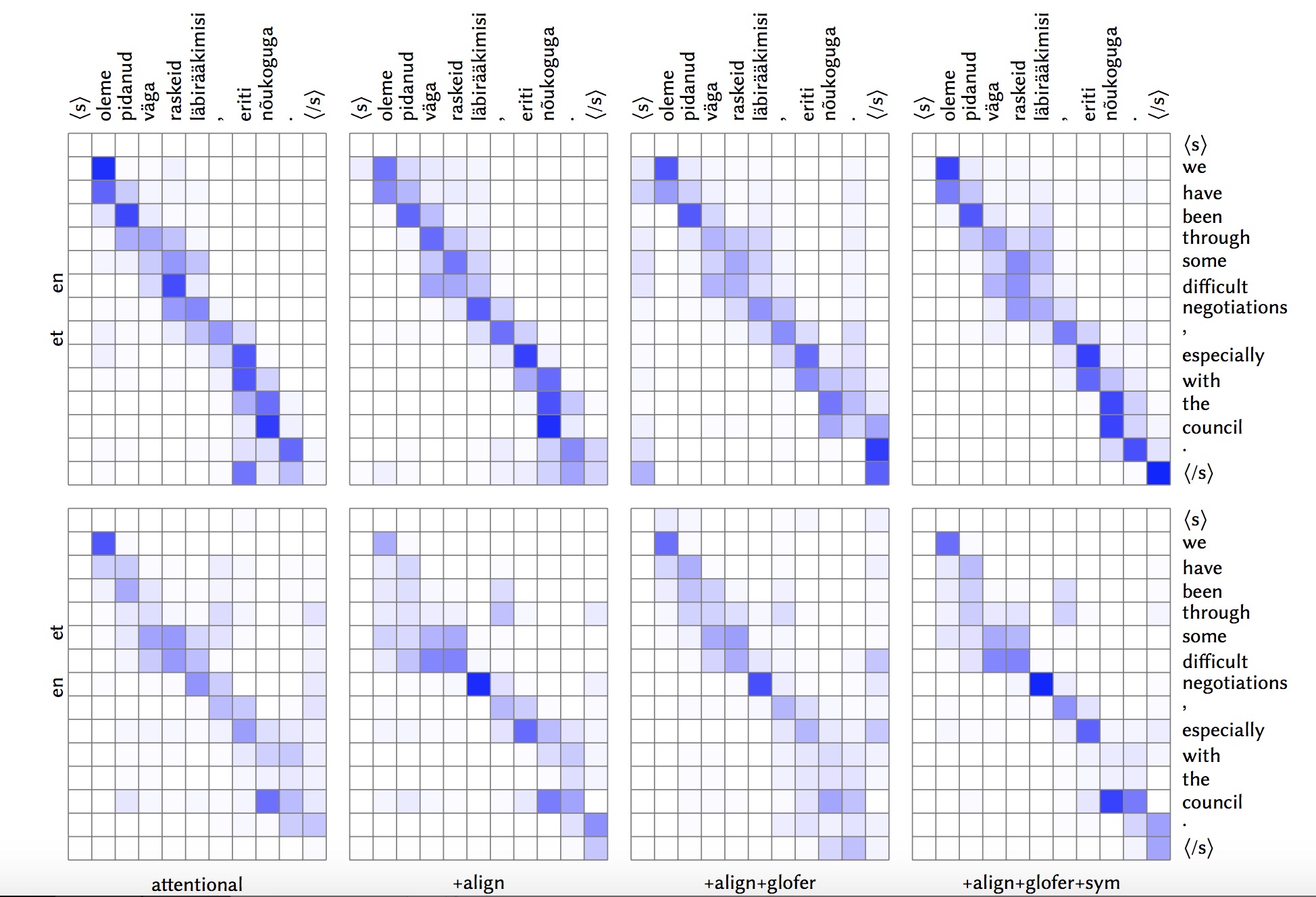

Cong Duy Vu Hoang, Gholamreza Haffari and Trevor Cohn. In Proceedings of The 14th Annual Workshop of The Australasian Language Technology Association (ALTA'16) (long, oral) (best paper award), 2016.


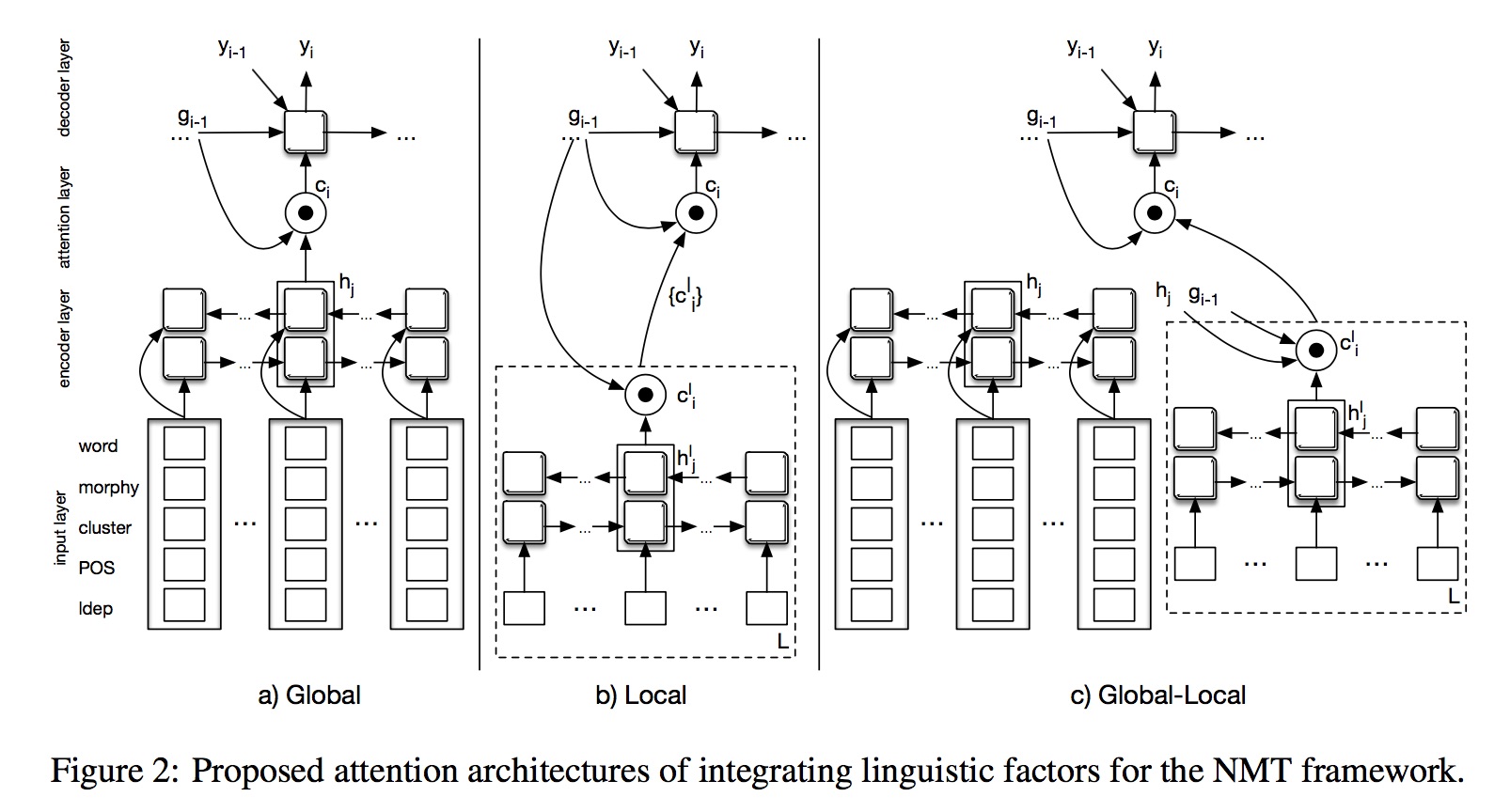

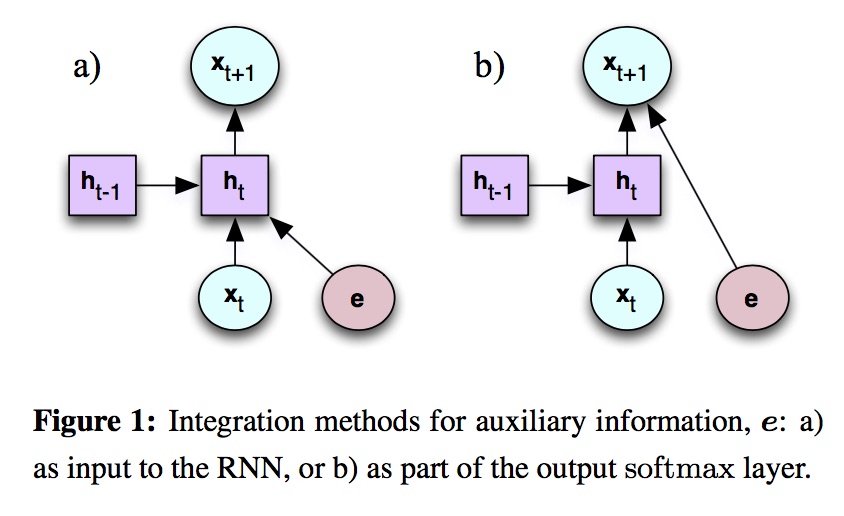

Trevor Cohn, Cong Duy Vu Hoang, Ekaterina Vylomova, Kaisheng Yao, Chris Dyer and Gholamreza Haffari. In Proceedings of The 15th Annual Conference of the North American Chapter of the Association for Computational Linguistics - Human Language Technologies (NAACL-HLT'16) (long), 2016.


Cong Duy Vu Hoang, Gholamreza Haffari and Trevor Cohn. In Proceedings of The 15th Annual Conference of the North American Chapter of the Association for Computational Linguistics - Human Language Technologies (NAACL-HLT'16) (short), 2016.
